A/B testing is one of the most effective ways to optimize your marketing strategy, improve conversion rates, and gain valuable insights into what resonates with your audience. However, even small mistakes can lead to misleading results, wasted resources, or missed opportunities. In this blog, we will explore the most common A/B testing mistakes, how they may impact your results, and how you can avoid them.

Mistake #1: Not Having a Clear Hypothesis

The first mistake we’ll consider is testing without a clear hypothesis. A hypothesis gives your test a purpose and helps you measure success effectively. Without defining what you are testing and why you are testing it, it may be difficult to know if you are heading in the right direction.

If you don’t start with a defined and clear hypothesis, your A/B test will be affected in many ways. Firstly, without a predefined expectation, you may read too much into meaningless fluctuations in your data which could lead to false conclusions. Your test may also lack focus because instead of testing a clear variable with an intended outcome, you might tweak multiple elements without understanding what is driving changes in performance. Additionally, running tests without a structured approach can lead to inconclusive results - wasting time and effort. Finally, without a hypothesis, decisions may be based on gut feelings rather than data-driven insights, reducing the effectiveness of optimization efforts.

How to Avoid This Mistake

1. Before Starting a Test, Clearly Define Your Hypothesis: Decide what it is you are trying to improve and develop a hypothesis that clearly states the expected outcome. A strong hypothesis provides direction and helps you make data-driven decisions.

For a step-by-step guide on crafting impactful hypotheses that drive meaningful A/B test results, check out our blog: Crafting Impactful Hypotheses for A/B Testing

Here is an example from the blog:

Scenario: We’re an affordable apparel brand and our primary goal is to increase AOV. After reviewing our order history data we realize that the current AOV is below $50, but we offer free shipping on orders over $50. We also notice that there is a significant number of customers abandoning checkout which is the first time our shipping fees are displayed.

Hypothesis: If we add messaging regarding the free shipping threshold to the cart, then we anticipate a rise in the average order value and an uptick in the overall revenue conversion rate, because more customers will see the free shipping incentive and be encouraged to add more items to their cart to qualify for the offer.

This structured approach ensures your test has a clear goal, making it easier to analyze results and implement meaningful changes.

2. Base Your Hypothesis on Data: Using analytics to understand user behavior will help to identify potential areas of improvement before you form your hypothesis. It defines a clear problem to solve. For example, if you have noticed a higher bounce rate on a page than normal, you might test a more engaging headline or stronger call to action. Without data, you’re relying on gut feelings which can make it difficult to generate a clear and meaningful hypothesis. A hypothesis that is developed in actual user behavior makes it more likely for you to test something that impacts conversions.

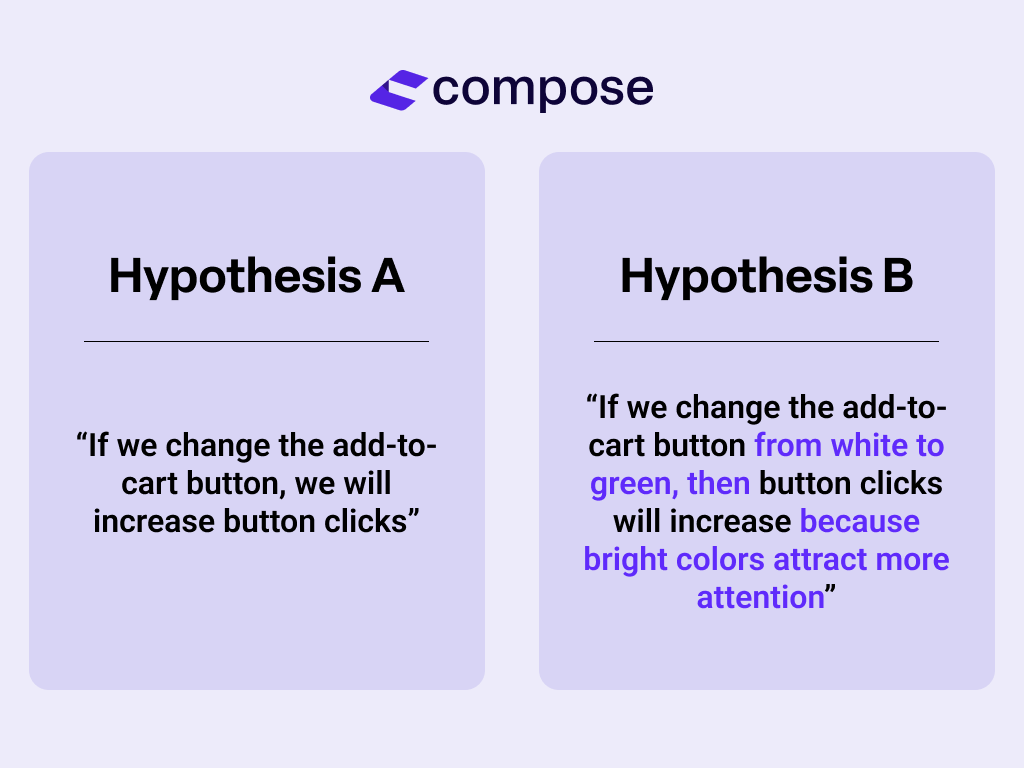

3. Keep Your Hypothesis Specific and Measurable: Ensuring your hypothesis is specific and measurable helps to create a structured test that generates clear insights. You should define the exact change being tested, the expected outcome, and the reasoning behind it. Below are two hypotheses–one vague, and one specific:

Hypothesis A lacks direction and clarity. It fails to answer what you are specifically changing about the add-to-cart button, why you are changing it, and what you are expecting from the change. By having a clear hypothesis that allows you to measure the results, you are able to track the results to understand if the test worked.

With Compose’s planning tools, you can develop and prioritize strong hypotheses, easily link them to any A/B test, and collaborate with your team. Plus, keep everyone aligned with a user-friendly roadmap outlining upcoming experiments for seamless execution.

Mistake #2: Running the Test Without Enough Users

A/B testing relies on statistical significance to ensure that the results are meaningful. A test run with too few users can create several potential issues. An increased risk of false positives or false negatives is a likely result of an experiment ended before enough users were tested.

For example, a company tests two CTA buttons and the yellow button gets 20 clicks while the purple button gets 30. They conclude that the purple button is better after converting only 50 people and immediately implement the change for all users. Over time, conversions may drop because the results of their experiment weren’t statistically significant.

With a small sample size, you reduce accuracy and increase randomness which can lead to poor decisions. Ensuring you have a sufficient number of users in your experiment helps to draw reliable, data-driven insights that will actually improve performance.

How to Avoid This Mistake

1. Use a Sample Size Calculator: A sample size calculator ensures that you test with enough users to get accurate results, know how long the test will take based on your website traffic, avoid stopping too early, and recognize when a test isn’t feasible due to low traffic. By calculating the right number of users before beginning your test, you make reliable decisions instead of depending on incomplete or misleading test results. For a deeper dive into how sample size affects your results, out blog, Minimizing Margin of Error for A/B Testing: 4 Easy Steps, goes in depth on how to determine the right sample size, the errors associated with too small a sample, and how to avoid type I and type II errors.

2. Run Tests Long Enough: Running an A/B test long enough ensures you collect enough data to make reliable decisions. If you stop a test too early, you risk acting on random fluctuations instead of meaningful insights. This also accounts for differences in user behavior over time which can vary by day of the week or time of day. Don’t stop your tests too early just because the initial results look promising. Let the data stabilize and reach statistical significance to avoid deceptive conclusions.

Compose’s built-in statistical significance tracking ensures you run tests with enough users to generate reliable insights. Instead of guessing when to stop an experiment, Compose helps you monitor progress in real-time, preventing premature conclusions based on incomplete data. With the option to choose between Bayesian and Frequentist approaches, compose allows you to select the method that best aligns with your goals and traffic size. By integrating statistical significance checks directly into your workflow, Compose enables you to make data-driven decisions with confidence, ensuring your tests lead to meaningful improvements rather than misleading results. Ready to see it in action? Schedule a demo to learn more!

Mistake #3: Assuming One Test Applies to All Audiences

User behavior can vary significantly across different segments such as different demographics, different geographic locations, new vs. returning users, or mobile vs. desktop users. When you conduct an A/B test on one segment and implement a change based on your findings across multiple segments, it may lead to missed optimization opportunities or a decline in performance.

How to Avoid This Mistake

Segment Your Audiences: By segmenting your audience, you avoid the mistake of applying a one-size-fits-all conclusion and instead make decisions that are adjusted to specific user groups. It also allows you to see how different users interact with your website which can assist you in improving overall conversions for all audiences. You can either segment your audience before running the test or use Compose to retroactively filter results for different groups, such as mobile vs. desktop users, to uncover trends you might otherwise miss. Filtering results post-testing can be particularly valuable for identifying differences in how various audiences interact with your website, allowing you to make informed and targeted decisions.

For example, a company decides to test two versions of a checkout page and finds that overall, Version A performs better. However, when the audiences are segmented they find that mobile users prefer Version B’s simple layout while desktop users prefer Version A which provides more product details.. Without segmenting these two groups, the company might have incorrectly assumed that Version A is best for everyone which would negatively impact mobile user conversions.

Key Audience Segments to Consider

Device Type: Mobile, desktop, tablet

Traffic Source: Organic search, paid ads, email, social media

User type: New vs. returning users, first-time buyers vs. repeat customers

Demographics: Age, gender, location

Behavior: Cart abandoners vs. high-intent shoppers

With Compose’s audience segmentation feature, you can easily split tests among different user groups, such as mobile vs. desktop users, to uncover insights that would otherwise be missed. Instead of assuming one version works for all, Compose allows you to analyze test results by segment, ensuring you make data-driven optimizations tailored to specific audiences. This helps you improve conversions for every user type rather than relying on a one-size-fits-all approach.

Mistake #4: Testing Too Many Changes At Once

A common mistake in A/B testing is testing multiple changes simultaneously. While it may seem like a good idea to test several elements at once to see a bigger impact, this approach can create more confusion than clarity. When you test multiple changes, it becomes difficult to pinpoint which specific change is responsible for the observed result, making your conclusions unreliable.

Your results could be skewed or inconclusive. The most significant issue is that it can lead to mixed signals in your data. For example, if you change the color of a CTA button, the headline, and the layout of the page all at once and see an increase in conversions, you won't be able to tell which of these changes had the biggest impact.

How to Avoid This Mistake:

1. Test One Variable at a Time: To ensure accuracy, focus on testing one specific change per test. The change could be something as small as a CTA button color or as large as the entire page layout, but by isolating one variable, you can accurately measure its impact on performance.

2. Prioritize Key Elements: Rather than making multiple changes at once, identify the most important elements to test. Focus on areas that have the greatest potential to influence conversions. Starting with high-impact variables will allow you to learn quicker and move forward with data-backed decisions. Once you have tested one element and have established a clear winner, move on to testing the next most impactful change.

3. Sequence Your Tests: If you do have multiple changes you want to test, sequence them. Start with once change, analyze the results, then move to the next most important element. This approach will help you to avoid the confusion of testing too many changes at once while still making progress on the improvement of your conversion rates. By testing in sequence, you can track the overall impact of each change and implement those changes on your site based on clear, reliable insights.

4. Use Multivariate Testing for Complex Tests: If you want to test multiple elements together to understand their relationship with one another, consider multivariate testing rather than traditional A/B testing. Multivariate testing allows you to test combinations of multiple variables at once but with a controlled approach.

5. Define Accurate Triggers for Your Tests: If your experiment only affects a specific audience (for example, users landing on a particular page), make sure your trigger conditions accurately reflect this. Testing all site traffic for an experiment that only impacts a small group can pollute your sample and lead to inaccurate results. Compose offers advanced trigger conditions including unique JavaScript triggers and Shopify preset triggers, to help you accurately target the right users and improve the reliability of your test results.

Mistake #5: Ignoring Micro Conversions

Focusing solely on macro conversions such as final purchases and ignoring micro conversions such as clicks on a product page, you may miss key insights into user behavior that could help you improve overall conversions. Micro conversions are important indicators of user intent and engagement, and neglecting them can lead to missed opportunities for optimization. They are often precursors to larger conversions. Ignoring them means you’re only looking at the final result, not the smaller steps that led to them.

How to Avoid This Mistake:

1. Track Micro Conversions: Start by identifying the small but significant actions that users take on their journey. These could include clicks, form completions, or engagement with specific features. Micro conversions are particularly important in longer or more complex sales cycles, where the final conversion might take several conversions. Tracking these micro-conversions allows you to spot trends early and adjust your strategy before users drop off completely. For example, if you notice that users frequently abandon their cart before checking out, focusing on improving the cart page could help increase both micro conversions and the final purchase rate.

2. Use Micro Conversions to Measure Progress: Micro conversions are milestones that indicate progress toward the ultimate goal. By measuring success in terms of both macro and micro conversions, you can get a fuller picture of the user experience and how small improvements can lead to bigger wins.

3. Focus on the Entire Conversion Funnel: Instead of looking at only the final conversion, take the time to analyze the entire funnel. Consider the stages users pass through, and optimize each one. If a user adds an item to their cart but doesn’t complete their purchase, identify where the friction points are and address those. Small improvements at each stage of the conversion funnel can lead to larger overall conversions.

Compose automatically tracks key micro conversions, such as engagement metrics and bounce rate, giving you real-time insights into user behavior beyond just final purchases. When setting up an experiment, you can also input custom goals to track specific actions that matter to your business—whether it’s clicks on a CTA, form completions, or time spent on a page. This ensures you capture both macro and micro conversions, helping you make informed optimizations at every stage of the user journey for better overall performance.

Mistake #6: Not Accounting for External Factors

External factors, such as the season, holidays, market trends, or even changes in the economic environment can significantly impact user behavior and affect the outcome of your test. Ignoring these factors can lead to misleading conclusions and impact your ability to accurately measure the changes you’re testing. For example, running an A/B test during a major shopping event like Black Friday could yield different results than if the same test was conducted during a slow season. Similarly, external factors like changes in social media trends or competitor actions can all influence user behavior making it difficult to isolate the true impact of your test changes.

How to Avoid This Mistake:

1. Consider the Season and Time of Year: Before running an A/B test, take into account the time of year and how it might affect user behavior. For example, retail sites might see a spike in traffic and sales during the holiday season, while B2B companies might experience a drop in engagement over the summer months. Running tests without considering these seasonal trends could lead to inaccurate results because traffic behavior may not represent typical patterns. Align your testing periods with more stable times of the year or account for fluctuations in your analysis of your test.

2. Monitor External Influences like Holidays and Events: Be mindful of external events or holidays that might affect user activity. Users may behave differently during a national holiday or economic downturn. These factors can cause spikes or dips in user behavior, so it is essential to track these events and consider their potential impact on your test results.

3. Conduct Tests Over a Sufficient Duration: To minimize the impact of fluctuations caused by external factors, ensure that your tests run long enough to capture enough data to account for the variability. If you’re testing during a time when external factors could influence behavior, extend the duration of your test to allow more data to come in. This helps to provide a more accurate picture of the impact your changes have on user behavior.

4. Use Historical Data for Context: Before starting an A/B test, review historical data to identify any recurring external events that could impact your results. This includes analyzing trends from past years, significant dates, or events that led to changes in user behavior. By understanding how external factors have impacted your site in the past, you can better interpret the results of your tests and adjust your strategies as needed.

Conclusion

A/B testing can be significantly helpful in optimizing your website and enhancing user experience, however, even small mistakes can lead to misleading results. By being mindful of common A/B testing mistakes, you can increase the reliability of your test results and make decisions that improve your performance.

Ready to take your conversion rate optimization to the next level? Schedule a demo to learn how Compose can fit into your strategy and help you make smarter, data-backed decisions.